After performing dismally in the Kaggle RSNA Intracranial Haemorrhage Competition thanks to a pig-headed strategy and too little thinking, I resolved to see what the winners had done right. This series of posts will cover what I learned looking at the code shared by the 2nd placed team, whose solution I found both approachable and innovative. I hope you enjoy it.

Part 2 of this series will give an overview of the data preparation techniques that the 2nd place solution used.

The Jupyter Notebooks to accompany these posts are here

Part 1 of the series is here

Competition Overview

The goal of the competition was to classify whether each image from a CT scan contained one or more of 5 different Intracranial Haemorrhage types, plus a 6th class called “any” which was positive if any of the other 5 classes were positive.

The 5 different haemorrhage types to identify

In this post I’ll run through how team NoBrainer prepared their data. I have lifted the team's code from their excellent GitHub repo and added it into a series of notebooks so that I could break down individual blocks of logic.

[The first notebook can be found here]. Note that I don't aim to rewrite any of the core logic; some small parts of the code were tweaked to enable some rapid testing. In this post we’ll cover:

Rescaling

Windowing

DICOM to JPEG

1. Rescaling

These CT scans have pixel values measured in Hounsfield units, which can be negative but are commonly stored as unsigned integers. This means that the values need to be rescaled using the Intercept and Slope stored in the DICOM's metadata before we can treat them as we do other more common image formats. The rescaling function is very straightforward.
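As a minimal sketch of that rescaling (not the team's exact code), the stored values are mapped to Hounsfield units with a linear transform. The function name `rescale_pixels` is hypothetical, and the sketch assumes the raw pixel array and the slope and intercept values have already been read from the DICOM metadata.

```python
import numpy as np


def rescale_pixels(raw_pixels, slope, intercept):
    """Map stored pixel values to Hounsfield units: HU = raw * slope + intercept."""
    # Cast to float first so negative results are not wrapped around
    # by the unsigned integer type the pixels are stored in.
    return raw_pixels.astype(np.float32) * slope + intercept


# Toy values standing in for a real scan (assumed, not taken from the dataset).
raw = np.array([[0, 1024, 2048]], dtype=np.uint16)
hu = rescale_pixels(raw, slope=1.0, intercept=-1024.0)
print(hu)  # values -1024, 0 and 1024 in Hounsfield units
```

The cast to float matters: doing the arithmetic directly on unsigned integers would not produce the negative values that air and other low-density material should have.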

However, Jeremy Howard's Some DICOM Gotchas to be Aware Of notebook highlights some of the unexpected and unusual pixel distributions some of the DICOM images have and also provides code to correct for these. I would really recommend diving into this notebook (and its companions) to fully understand the pitfalls of this competition’s DICOM data. From my reading of team NoBrainer’s code, their rescaling probably suffered due to some of the anomalies pointed out in the Gotchas notebook.

2. Windowing

BACKGROUND

The need for windowing arises because of our eye's limited ability to detect different intensities of light and dark, as explained in the RSNA IH Detection - EDA notebook on Kaggle:

Hounsfield units are a measurement to describe radiodensity

Different tissues have different HUs

Our eye can only detect ~6% change in greyscale (16 shades of grey)

Given 2000 Hounsfield Units (HU) in one image (-1000 to 1000), this means that 1 greyscale level out of 256 covers about 8 HUs

Consequently there needs to be a change of roughly 120 HU (6% of 2000, close to 2000 / 16 = 125) before our eye is able to detect an intensity change in the image

A haemorrhage in the brain might show relevant HU in the range of 8-70. We won't be able to see important changes in the intensity to detect the haemorrhage

This is the reason why we have to focus 256 shades of grey into a small range/window of HU units

The “level” means where this window is centered
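The arithmetic in the list above can be checked with a few lines of Python. This is only an illustrative sketch: the helper name `hu_per_grey_level` is made up, and the 80 HU wide window is borrowed from the brain window quoted later in this post.

```python
def hu_per_grey_level(hu_range, grey_levels):
    """How many Hounsfield units each grey level has to cover."""
    return hu_range / grey_levels


full_range = hu_per_grey_level(2000, 256)   # 256 stored shades over -1000..1000 HU
visible = hu_per_grey_level(2000, 16)       # ~16 shades the eye can tell apart
brain_window = hu_per_grey_level(80, 256)   # 256 shades over an 80 HU wide window

print(full_range, visible, brain_window)
```

Narrowing the range from 2000 HU to 80 HU means each grey level covers a fraction of a Hounsfield unit, which is why subtle density differences become visible after windowing.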

A window is a range of Hounsfield units to which the image is constrained. It is expressed as (window level, window width). A window of (75, 60) means that when converting the image to 256-level greyscale for inspection by the radiologist, pixel values at or below 45 (75 - 30) are set to 0 (i.e. black) and pixel values at or above 105 (75 + 30) are set to 255 (i.e. white). In other words, a min and max are set for the HUs in the image.
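To make the (75, 60) example concrete, here is a minimal sketch of a single window applied to a handful of HU values. The function name `apply_window` is hypothetical and this is a generic clip-and-scale, not @appian's or the team's code.

```python
import numpy as np


def apply_window(hu_image, level, width):
    """Clip HU values to [level - width/2, level + width/2] and scale to 0-255."""
    lower = level - width / 2
    upper = level + width / 2
    clipped = np.clip(hu_image, lower, upper)
    scaled = (clipped - lower) / (upper - lower) * 255.0
    return scaled.astype(np.uint8)


hu_values = np.array([0.0, 45.0, 75.0, 105.0, 300.0])
windowed = apply_window(hu_values, level=75, width=60)
print(windowed)  # 0 and 45 become black (0), 105 and 300 become white (255)
```

Everything outside the 45 to 105 range collapses to pure black or pure white, and the full 256 grey levels are spent on the 60 HU in between.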

CODE

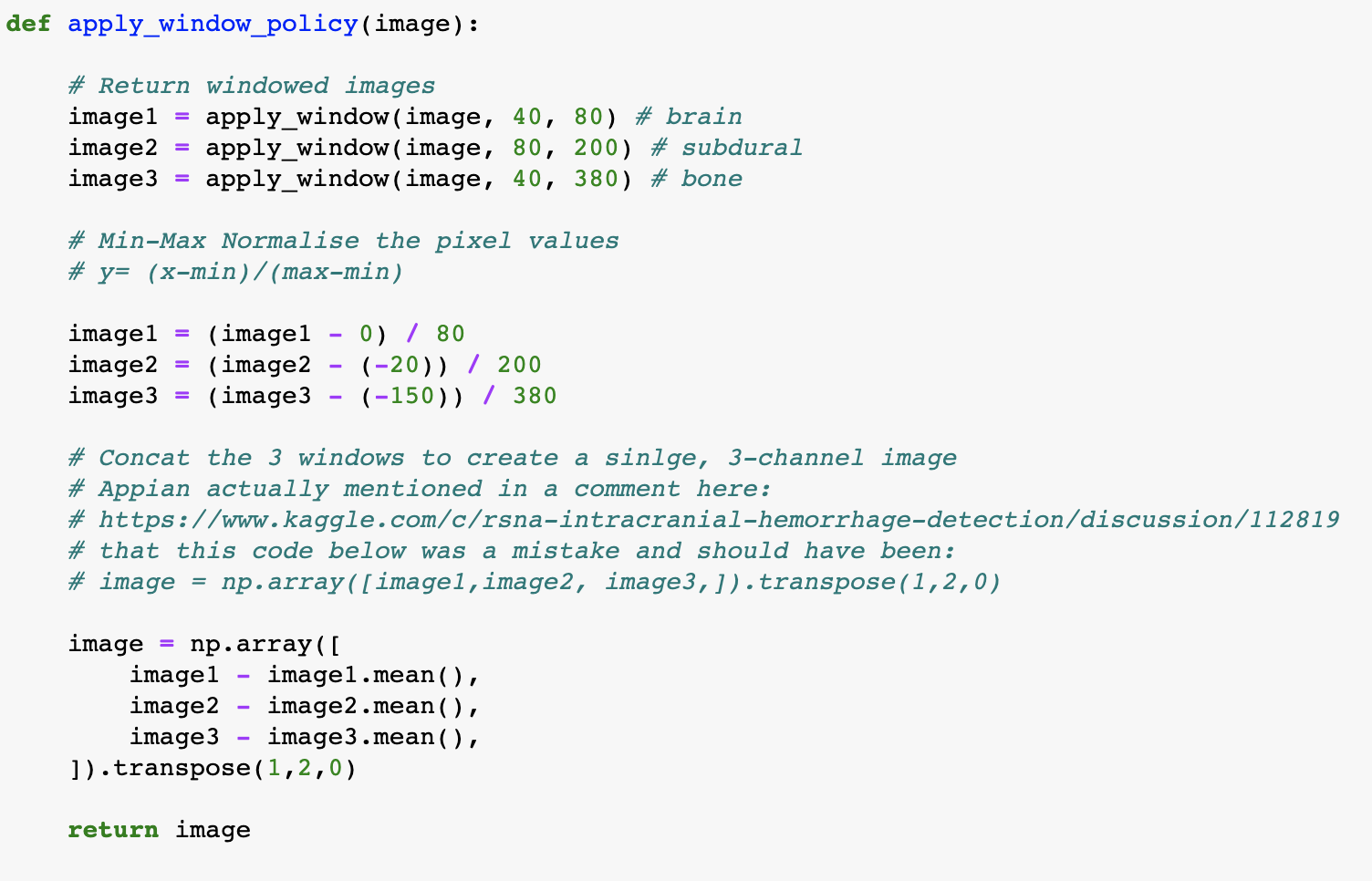

The windowing used in the 2nd place solution was originally shared by Kaggle user @appian as part of an end-to-end code dump*:

The windowing policy in @appian’s code that he shared

[*There was a lot of controversy around this choice to release ready-to-run, high-scoring code while the competition was in play. The community was split; less experienced Kagglers were grateful for the clean code to learn from, while more experienced/competitive Kagglers were furious that a novice would then be able to copy-paste this code and achieve strong scores. By the end of the competition just relying on Appian's code wouldn't have earned you a medal, but at the time it was released it would have placed you in the high bronze medal range in the public leaderboard.]

Windowing Output

The windowing function creates a 3-channel image from the 1-channel DICOM data, where each channel covers a different window range, similar to how a radiologist might focus on different windows when assessing an image. The window ranges used were Brain (40, 80), Subdural (80, 200) and Bone (40, 380). Creating 3-channel images also enabled participants to easily use existing image classification models without any modification to the architecture at the input.
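A rough sketch of how three windows can be stacked into one image is shown below. It uses the (level, width) pairs quoted above, but the names `WINDOWS`, `window_to_unit_range` and `three_channel_image` are my own, and any extra normalisation in the original code is left out.

```python
import numpy as np

# (level, width) pairs as quoted in this post.
WINDOWS = {"brain": (40, 80), "subdural": (80, 200), "bone": (40, 380)}


def window_to_unit_range(hu_image, level, width):
    """Clip to the window and scale to floats between 0 and 1."""
    lower, upper = level - width / 2, level + width / 2
    return (np.clip(hu_image, lower, upper) - lower) / (upper - lower)


def three_channel_image(hu_image):
    """Stack one windowed copy of the image per channel (height, width, 3)."""
    channels = [
        window_to_unit_range(hu_image, level, width)
        for level, width in WINDOWS.values()
    ]
    return np.stack(channels, axis=-1)


# A fake 4x4 slice filled with 0 HU, standing in for a rescaled scan.
stacked = three_channel_image(np.zeros((4, 4)))
print(stacked.shape)  # (4, 4, 3)
```

Because the result has the same shape as an RGB image, it can be fed to a pretrained image classifier without touching the first layer.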

Each channel in the new 3 channel image

3. DICOM to JPEG

Many participants in the competition opted to do a one-off conversion of the images from DICOM format to JPEG to enable them to easily use their existing data processing tools. The conversion also meant that the saved images were smaller and so faster to process and train with. Given the large size of the dataset (100GB+), any speed-ups in processing and training were super valuable in enabling faster experimentation.

pydicom was the Python library used to open DICOM images and extract the necessary metadata to enable rescaling. The team's conversion function wraps up the reading, rescaling and windowing functions and saves the image.
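The overall flow can be sketched as below. This is an assumption-laden outline rather than the team's function: the names `hu_to_rgb` and `dicom_to_jpeg` are hypothetical, Pillow is my choice for writing the JPEG, and the sketch ignores the pixel anomalies discussed in the Gotchas notebook.

```python
import numpy as np

# (level, width) pairs as quoted in this post: brain, subdural, bone.
DEFAULT_WINDOWS = ((40, 80), (80, 200), (40, 380))


def hu_to_rgb(hu_image, windows=DEFAULT_WINDOWS):
    """Window an HU image once per channel and return an 8-bit (H, W, 3) array."""
    channels = []
    for level, width in windows:
        lower, upper = level - width / 2, level + width / 2
        unit = (np.clip(hu_image, lower, upper) - lower) / (upper - lower)
        channels.append((unit * 255).astype(np.uint8))
    return np.stack(channels, axis=-1)


def dicom_to_jpeg(dicom_path, jpeg_path):
    """Read a DICOM file, rescale to HU, window it and save it as a JPEG."""
    # Imported here so hu_to_rgb can be used without these packages installed.
    import pydicom
    from PIL import Image

    dcm = pydicom.dcmread(dicom_path)
    slope = float(dcm.RescaleSlope)
    intercept = float(dcm.RescaleIntercept)
    hu_image = dcm.pixel_array.astype(np.float32) * slope + intercept
    Image.fromarray(hu_to_rgb(hu_image)).save(jpeg_path, format="JPEG")
```

Calling `dicom_to_jpeg("some_scan.dcm", "some_scan.jpg")` (placeholder paths) once per file gives a folder of ordinary JPEGs that standard image data loaders can read.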

That covers the main parts of the data preparation; check out my notebook for the full A-Z. In the next post I’ll cover data augmentation and training the image classifier.